Why are similar questions asked in a different way?

This is standard practice in psychometrics: asking about the same construct in differently worded questions helps ensure the reliability of the responses.

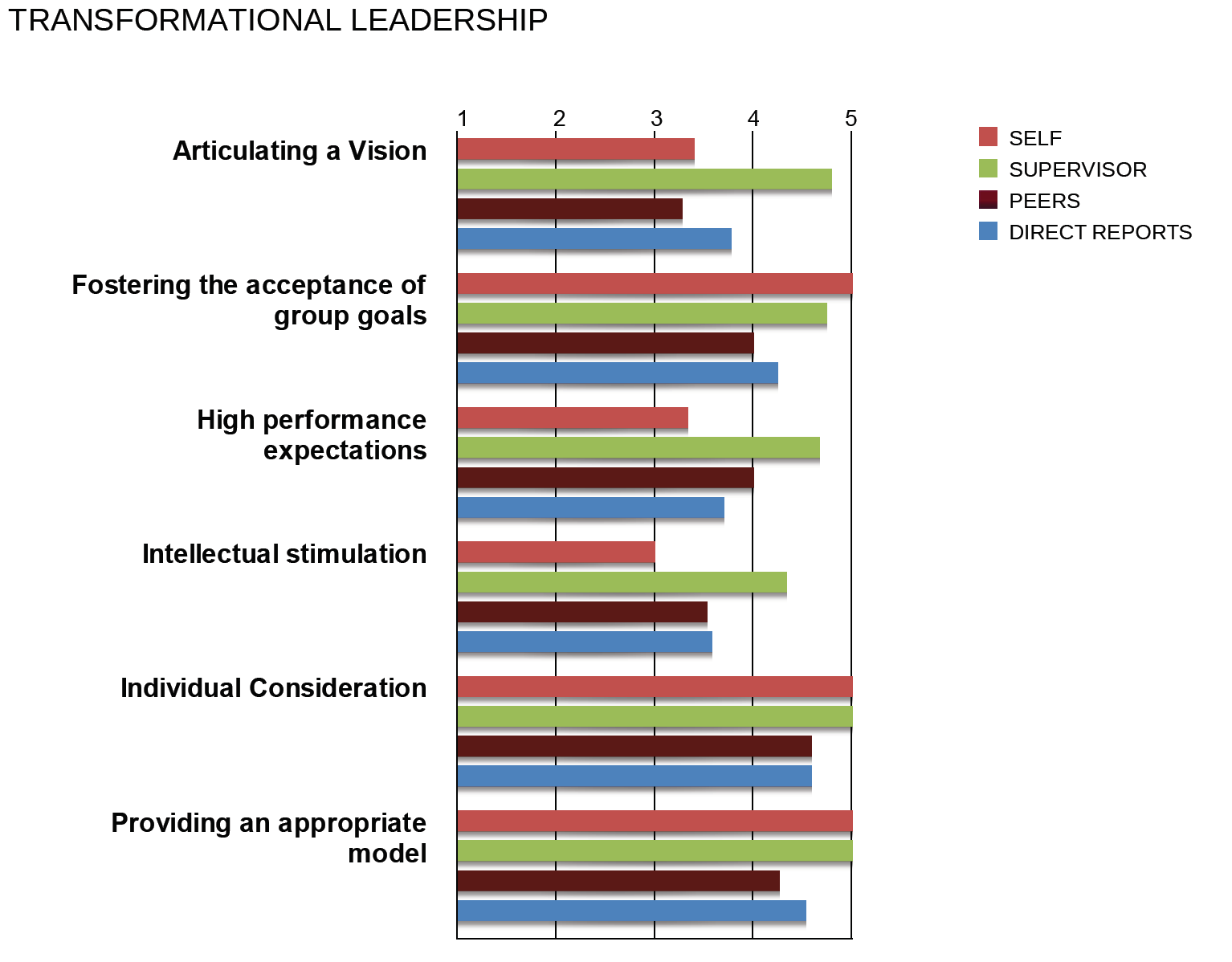

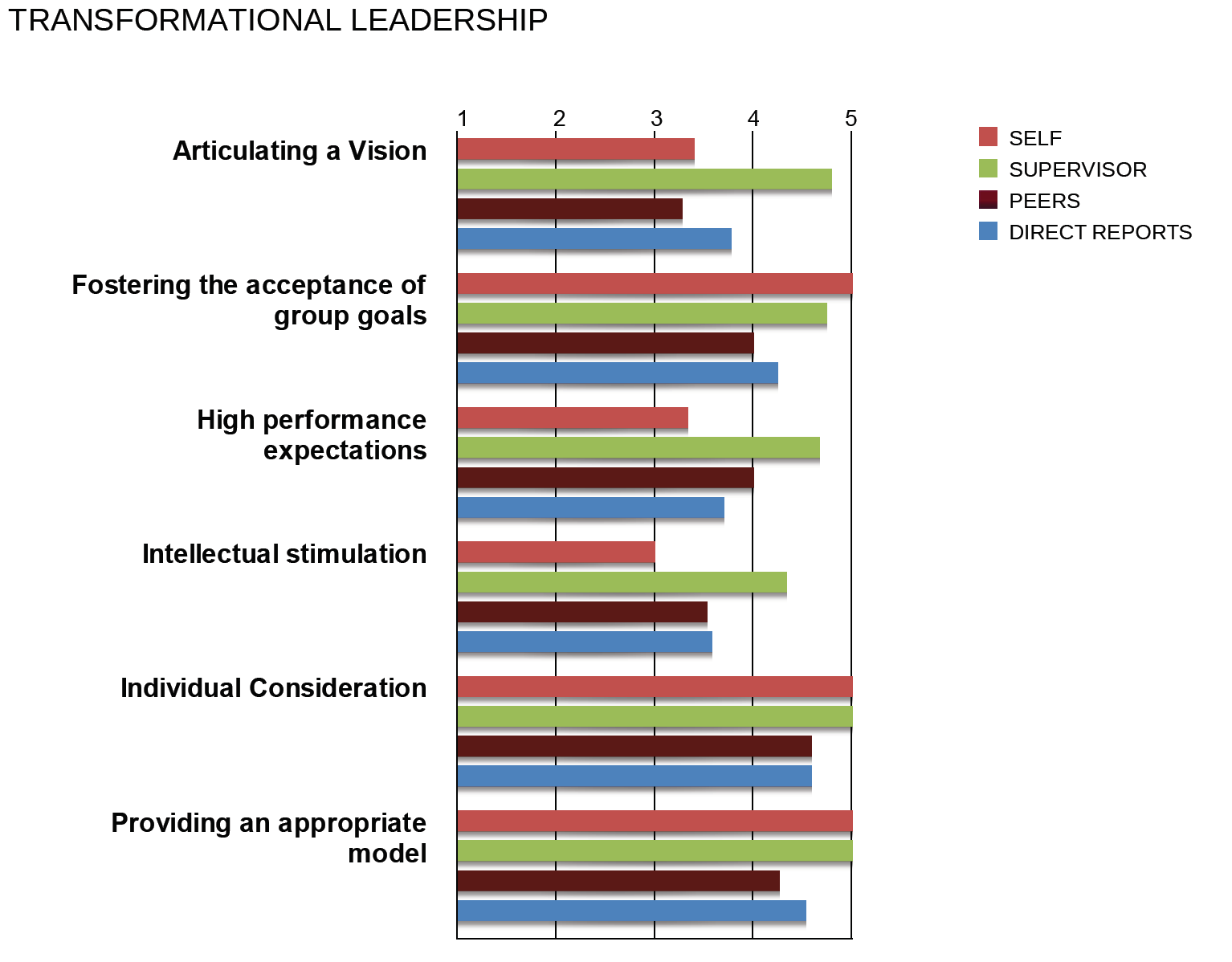

What does the summary feedback, which depicts the average of the evaluations, look like in Nexion's evidence-based feedback report?

Extract of our evidence-based feedback report:

Example:


Sound psychometric properties

As outlined in the following text, written by Prof. Antonakis, a psychometric measure has sound psychometric properties if it measures what it is supposed to measure. In addition, before proposing that a new measure offers something different from, or is significantly better than, measures with an established history, its proponents must demonstrate that it is reliable and valid (for detailed accounts of validity and reliability, see Carmines & Zeller, 1979; Kerlinger, 1986; Nunnally & Bernstein, 1994).

Reliability refers to the extent to which a test's indicators are internally cohesive and measure a construct (e.g., leadership behaviours, traits, ability) consistently (Carmines & Zeller, 1979). A measure can be reliable yet reliably measure the wrong thing, that is, be consistently off target. Thus, a reliable measure is not necessarily a valid one.

Inter-rater reliability (variation in measurements when taken by different persons).

Test-retest reliability (variation in measurements taken by a single person or instrument on the same item and under the same conditions at a different time).

Inter-method reliability (variation in measurements of the same target when taken by different methods or instruments).

Internal-consistency reliability (assesses the consistency of results across items within a test).
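Internal-consistency reliability is often summarised with Cronbach's alpha. The text above does not name a specific statistic, so alpha is an illustrative choice here, and the function name `cronbach_alpha` and the scores are hypothetical rather than taken from Nexion's instrument. A minimal sketch:

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items.

    item_scores: one list per item, each holding the scores that the
    same respondents (in the same order) gave to that item.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Total score per respondent across all items.
    totals = [sum(scores) for scores in zip(*item_scores)]
    item_variance_sum = sum(variance(item) for item in item_scores)
    total_variance = variance(totals)
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical data: three similar questions answered by five respondents.
items = [
    [4, 3, 5, 2, 4],
    [4, 2, 5, 3, 4],
    [5, 3, 4, 2, 4],
]
alpha = cronbach_alpha(items)
print(round(alpha, 2))  # about 0.90 for this made-up data
```

Values closer to 1 indicate that the items vary together, which is why similar questions asked in different ways can support a reliability check. What counts as an acceptable value depends on the context and is not specified in the source.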

Validity indicates "the extent to which any measuring instrument measures what it is intended to measure" (Carmines & Zeller, 1979, p. 17) and, when demonstrated, suggests that the measure does what it should do (i.e., is on target, consistently).

Construct validity (Does the measure relate to a variety of other measures as specified in a theory?).

Criterion validity (Does the measure predict or explain variance in outcome measures?).

Concurrent validity (Is it associated with pre-existing tests/indicators that already measure the same concept?).

Predictive validity (Does it predict a known association between the construct you are measuring and something else?).

Discriminant validity (Is the measure correlated or uncorrelated with competing measures?).

Convergent validity (Are different measures of the same construct strongly correlated with each other?).

Incremental validity (Does the measure explain unique variance in dependent outcomes beyond the variance that is accounted for by competing constructs?).
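The incremental validity question can be illustrated as a change in explained variance (R²) when the new measure is added to an established one. The sketch below is only an illustration under stated assumptions: it uses the standard two-predictor R² formula based on Pearson correlations, and the function names and all data are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def incremental_r2(outcome, established, new):
    """Return (R2 with established only, R2 with both, the difference)."""
    r_ye = pearson_r(outcome, established)
    r_yn = pearson_r(outcome, new)
    r_en = pearson_r(established, new)
    if abs(r_en) >= 1.0:
        raise ValueError("predictors are perfectly collinear")
    r2_base = r_ye ** 2
    # R2 of a linear regression on two predictors, from correlations.
    r2_full = (r_ye ** 2 + r_yn ** 2 - 2 * r_ye * r_yn * r_en) / (1 - r_en ** 2)
    return r2_base, r2_full, r2_full - r2_base


# Hypothetical scores for six people.
established = [1, 2, 3, 4, 5, 6]   # established measure
new = [2, 1, 4, 3, 6, 5]           # new measure
outcome = [2, 3, 5, 4, 8, 7]       # dependent outcome

r2_base, r2_full, delta_r2 = incremental_r2(outcome, established, new)
print(round(r2_base, 3), round(r2_full, 3), round(delta_r2, 3))
```

A difference clearly above zero suggests that the new measure explains variance the established one does not. In practice this would be tested statistically on a much larger sample; the six data points here only show the arithmetic.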

[Figure: three panels labelled "Reliable, but not valid", "Reliable and valid", and "Not reliable and not valid"]
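The three cases can also be sketched numerically, treating spread across repeated measurements as a stand-in for reliability and distance from a known true value as a stand-in for being on target. This is a simplification for illustration only: the true value, the thresholds, and the function name `describe` are all hypothetical, and psychometric validity is normally established through the kinds of evidence listed above rather than against a known true score.

```python
from statistics import mean, stdev


def describe(measurements, true_value, max_spread=1.0, max_bias=1.0):
    """Return (reliable, on_target) for a set of repeated measurements.

    max_spread and max_bias are arbitrary illustrative thresholds.
    """
    spread = stdev(measurements)                 # consistency of the results
    bias = abs(mean(measurements) - true_value)  # distance from the target
    return spread <= max_spread, bias <= max_bias


TRUE_VALUE = 50.0  # hypothetical true score

consistent_off_target = [60.1, 59.8, 60.3, 59.9, 60.0]
consistent_on_target = [50.2, 49.9, 50.1, 49.8, 50.0]
scattered = [38.0, 61.0, 45.0, 70.0, 52.0]

print(describe(consistent_off_target, TRUE_VALUE))  # reliable, but not on target
print(describe(consistent_on_target, TRUE_VALUE))   # reliable and on target
print(describe(scattered, TRUE_VALUE))              # neither
```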